Accelerating Alya engineering simulations by using FPGAs

Success story highlights:

- Keywords: FPGA, Exascale, engineering simulations, incompressible flow, GPUs

- Industry sector: Automotive, Aerospace, Manufacturing, Energy

- Key codes used: Alya

Organisations & Codes Involved:

Edinburgh Parallel Computing Centre (EPCC) at the University of Edinburgh was founded in 1990 with the objective of accelerating the effective exploitation of novel computing throughout industry, academia and commerce. With over 110 technical staff, and managing ARCHER2 (the UK national supercomputer) along with numerous other systems, EPCC is the UK’s leading supercomputing centre. With over 30 years of experience in HPC and computational simulation, many of EPCC’s staff are involved in projects such as EXCELLERAT, exploring techniques to optimise and improve codes on modern and next-generation supercomputers.

Alya is a high-performance computational mechanics code for solving complex coupled multi-physics / multi-scale / multi-domain problems, most of which come from the engineering realm. The physics solved by Alya include incompressible/compressible flows, non-linear solid mechanics, chemistry, particle transport, multiphase problems, heat transfer, turbulence modelling and electrical propagation, among others. Alya is one of only two Computational Fluid Dynamics (CFD) codes in the Unified European Applications Benchmark Suite (UEABS) as well as the Accelerator benchmark suite of PRACE.

Scientific Challenge:

Alya is a simulation code that enables engineers to study highly complex systems, including internal combustion engines and aircraft aerodynamics. Such complex simulations require many hours to run on the latest generation of supercomputers, and EXCELLERAT has been exploring ways in which the code can be accelerated for next-generation exascale machines. One important question is what role novel hardware can play in enhancing such applications, potentially helping to address areas of the code that are not fully suited to traditional CPU architectures. In this work we focused on Alya’s incompressible flow matrix assembly kernel. This kernel accounts for a large percentage of the runtime for the Alya benchmarks, and profiling on the CPU showed that some of its execution time is spent stalled due to memory and micro-architecture issues. Our hypothesis was therefore that moving to a different architecture could ameliorate these issues.

Solution:

The blackbox microarchitecture of CPUs and GPUs, which is designed to be general and suit a very wide variety of codes, is fixed. By contrast Field Programmable Gate Arrays (FPGAs) enable developers to tailor the electronics of the chip to the application in question and hence control all aspects of execution. The benefit of this is that issues found on other architectures, such as the stalling of Alya’s incompressible flow matrix assembly routine, can often be addressed by such specialisation and clever use of a combination of memory buffers and concurrent logic.

However, the devil is in the detail: converting the existing algorithm, which is based on von Neumann principles, into a dataflow design requires multiple steps and insight to obtain the best performance. This is because FPGAs provide massive on-chip concurrency, which has the raw potential to deliver significant performance, but the code must be designed from the perspective of all parts operating concurrently in a large pipeline, with data continually moving from one part to the next.
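
To make the contrast concrete, the following is a minimal Python sketch of the same toy kernel written first as a conventional sequential loop and then as a chain of streaming stages. It is an illustration only: the stage functions `load`, `compute` and `store` are hypothetical stand-ins rather than Alya routines, and Python generators only model the structure of a pipeline. On an FPGA the stages would be separate pieces of logic that genuinely run at the same time.

```python
from typing import Callable, Iterable, Iterator, List

# Hypothetical stand-ins for three steps of a kernel (NOT Alya's routines).
def load(x: float) -> float:
    return x * 2.0

def compute(x: float) -> float:
    return x + 1.0

def store(x: float) -> float:
    return x / 2.0

def sequential_style(elements: Iterable[float]) -> List[float]:
    """Conventional loop: one element passes through every step in turn."""
    results = []
    for e in elements:
        results.append(store(compute(load(e))))
    return results

def stage(fn: Callable[[float], float], upstream: Iterable[float]) -> Iterator[float]:
    """One pipeline stage: take a value from upstream, transform it, pass it on."""
    for value in upstream:
        yield fn(value)

def dataflow_style(elements: Iterable[float]) -> List[float]:
    """Stages are wired together by streams; values flow through one at a time."""
    pipeline = stage(store, stage(compute, stage(load, elements)))
    return list(pipeline)

if __name__ == "__main__":
    data = [1.0, 2.0, 3.0]
    print(sequential_style(data))
    print(dataflow_style(data))
```

Both functions compute the same result. The difference is that the second is expressed as independent stages connected by streams, which is the shape a dataflow design needs before the stages can be made concurrent in hardware.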

Not all parts of Alya’s incompressible flow matrix assembly routine were fully suited to the FPGA. This was due to a combination of algorithmic issues (facets that simply do not map as well to the architecture) and the resource usage of the digital signal processor (DSP) engines that are used to implement floating-point arithmetic. Consequently, we explored a mixed approach, where small parts of the kernel remained resident on the CPU and the rest ran on the FPGA, with data continually streaming between the two. In this manner we were able to map each part of the workload to the architecture that best suited it.
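
The shape of such a mixed approach can be sketched in Python with two threads joined by bounded queues, one standing in for the host CPU and one for the accelerator. This is an analogy under stated assumptions, not the implementation described in the paper: `cpu_part`, `fpga_part`, `run_hybrid` and the queue depth are all hypothetical names and values, and a real design would stream data over the host-to-device interface rather than through Python queues.

```python
import queue
import threading
from typing import List

SENTINEL = None  # marks the end of the stream

def cpu_part(x: float) -> float:
    # Hypothetical stand-in for the small portion of the kernel kept on the CPU.
    return x - 1.0

def fpga_part(x: float) -> float:
    # Hypothetical stand-in for the bulk of the kernel offloaded to the FPGA.
    return x * x

def run_hybrid(inputs: List[float], depth: int = 4) -> List[float]:
    """Stream values through the CPU part and then the 'device' part."""
    to_device: queue.Queue = queue.Queue(maxsize=depth)
    from_device: queue.Queue = queue.Queue(maxsize=depth)

    def host_producer() -> None:
        # Host side: do the CPU-resident work, then stream each value out.
        for x in inputs:
            to_device.put(cpu_part(x))
        to_device.put(SENTINEL)

    def device_worker() -> None:
        # Device side: process values as they arrive, without waiting for all.
        while True:
            item = to_device.get()
            if item is SENTINEL:
                from_device.put(SENTINEL)
                return
            from_device.put(fpga_part(item))

    threads = [threading.Thread(target=host_producer),
               threading.Thread(target=device_worker)]
    for t in threads:
        t.start()

    results = []
    while True:  # collect results on the calling thread as they stream back
        item = from_device.get()
        if item is SENTINEL:
            break
        results.append(item)

    for t in threads:
        t.join()
    return results

if __name__ == "__main__":
    print(run_hybrid([1.0, 2.0, 3.0]))
```

The bounded queues mean neither side can run arbitrarily far ahead of the other, which mirrors the need to keep both architectures continually fed with data.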

The paper (linked from the bottom of the success story) provides significantly more technical detail for interested readers.

Scientific impact of this result:

As Alya is one of EXCELLERAT’s codes, advances to Alya itself are of primary value, but equally important are the general lessons that can be learnt more widely about accelerating HPC codes on FPGAs. These take the form of both case studies and algorithmic approaches, which are hugely valuable in driving forward the popularity of this architecture for such HPC workloads.

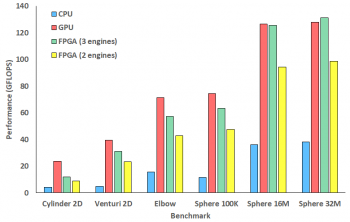

After porting the code, we compared the performance, power draw and power efficiency of a Xilinx Alveo U280 FPGA against a 24-core Cascade Lake Xeon Platinum CPU and a V100 GPU. Overall, the performance of the ported Alya kernel was impressive: a single FPGA engine (which can be thought of as similar to a CPU core, as multiple engines can be fitted on the FPGA) achieved more than twice the performance of the entire 24-core CPU. We then scaled up the number of engines on the FPGA to three, which is the maximum that could be fitted within the resource constraints, and this performed comparably to the GPU, especially for the larger Alya benchmarks. Keeping the data flowing is key here, and the high-bandwidth memory of the Alveo U280 FPGA is very beneficial in this regard.

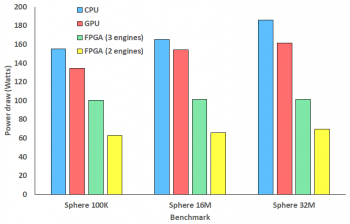

Power is another important consideration, especially as machines grow in size on the way to exascale. Running on the FPGA drew significantly less power than the CPU and GPU, and hence overall delivered greater power efficiency (work done per unit of power). This is an interesting outcome because, whilst it is generally accepted that FPGAs are more power efficient, it is the combination of performance and power benefits that makes FPGAs important for future consideration.
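
As a small illustration of the power efficiency metric, the Python sketch below divides a throughput figure by an average power draw. Interpreting "work done" as throughput in arbitrary units per second is an assumption made here for illustration, the function name `power_efficiency` is hypothetical, and the numbers in the usage example are placeholders rather than measurements from this study.

```python
def power_efficiency(work_per_second: float, average_power_watts: float) -> float:
    """Return work done per second per watt of average power draw."""
    if average_power_watts <= 0:
        raise ValueError("average power draw must be positive")
    return work_per_second / average_power_watts

if __name__ == "__main__":
    # Placeholder values only; NOT results from the paper.
    device_a = power_efficiency(work_per_second=100.0, average_power_watts=50.0)
    device_b = power_efficiency(work_per_second=120.0, average_power_watts=240.0)
    print(device_a, device_b)
```

The placeholder values show why the metric matters: a device with somewhat lower raw throughput can still be the more efficient choice if it draws much less power.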

Whilst FPGAs are yet to gain ubiquity for accelerating HPC simulation codes, they do have an important role to play if you pick your battles appropriately and understand how FPGAs can ameliorate specific code-level issues. The paper contains a lot more detail about this work, which benefits not only Alya but also the popularity of FPGAs for engineering simulations more widely.

Benefits for further research:

- The three-engine FPGA approach outperforms GPUs on the larger benchmarks

- The FPGA configurations drew significantly less power than the CPU and GPU and delivered greater power efficiency, especially for the Sphere 16M and 32M benchmarks.

- FPGAs can help improve non-compute overheads, where GPUs have greater raw floating-point capability but can be limited by overheads imposed by the general-purpose architecture.

PRODUCTS/SERVICES:

- A consultancy on how to port simulation codes to FPGA architectures

- Training on acceleration of HPC kernels on FPGAs

- Optimisation of existing code kernels by porting to FPGAs

UNIQUE VALUE OF EACH SERVICE:

- FPGAs typically have an order of magnitude more bandwidth and concurrency than other technologies. These raw properties are not always easy to exploit, but when they can be exploited, significant benefits can often be obtained.

- FPGAs enable a tailor-made effect and support the specialisation of the electronics to the execution of the simulation code

- FPGAs are typically very energy efficient, often requiring significantly less energy to execute a workload when compared against a CPU or GPU. This is the case for the Alya incompressible flow matrix assembly kernel (see second figure).

If you have any questions related to this success story, please register on our Service Portal and send a request on the “my projects” page.

Reference:

Brown, “Porting incompressible flow matrix assembly to FPGAs for accelerating HPC engineering simulations,” 2021 IEEE/ACM International Workshop on Heterogeneous High-performance Reconfigurable Computing (H2RC), 2021, pp. 9-20, doi: 10.1109/H2RC54759.2021.00007.